The paper “Adversarial Unsupervised Domain Adaptation for Hand Gesture Recognition using Thermal Images,” by Aveen Dayal, Aishwarya M., Abhilash S., C. Krishna Mohan, Abhinav Kumar, and Linga Reddy Cenkeramaddi, has been accepted for publication in the IEEE Sensors Journal (2023).

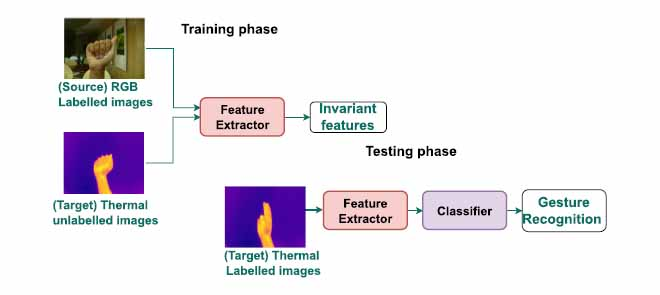

Abstract: Hand gesture recognition has a wide range of applications, including in the automotive and industrial sectors, health assistive systems, authentication, and so on. Thermal images are more resistant to environmental changes than red–green–blue (RGB) images for hand gesture recognition. However, one disadvantage of using thermal images for this task is the scarcity of labeled thermal datasets. To tackle this problem, we propose a method that combines unsupervised domain adaptation (UDA) techniques with deep-learning (DL) technology to remove the need for labeled thermal data in the learning process. There are several types and methods for implementing UDA, with adversarial UDA being one of the most common. In this article, for the first time in this field, we propose a novel adversarial UDA model that uses channel attention and bottleneck layers to learn domain-invariant features across RGB and thermal domains. Thus, the proposed model leverages the information from the labeled RGB data to solve the hand gesture recognition task using thermal images. We evaluate the proposed model on two hand gesture datasets, namely, Sign Digit Classification and Alphabet Gesture Classification, and compare it to other benchmark models in terms of accuracy, model size, and model parameters. Our model outperforms the other state-of-the-art methods on the Sign Digit Classification and Alphabet Gesture Classification datasets and achieves 91.32% and 80.91% target test accuracy, respectively.

DOI: 10.1109/JSEN.2023.3235379

Keywords: Task analysis, Gesture recognition, Adaptation models, Feature extraction, Thermal imaging sensors, Thermal imaging camera, Data models, Training